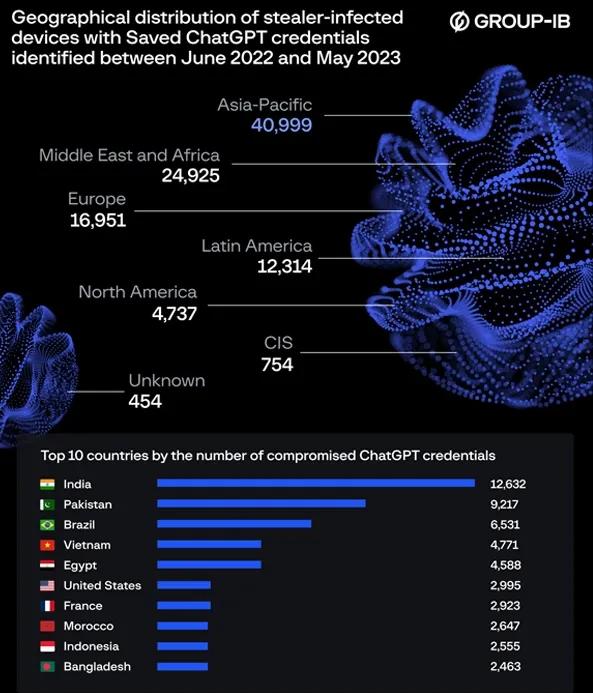

Between June 2022 and May 2023, a total of 101,100 OpenAI ChatGPT account credentials were compromised and subsequently made available on dark web marketplaces, 12,632 of them from accounts located in India. Group-IB, which discovered the credentials, reported that they were found in information stealer logs being sold on the cybercrime underground. According to the report shared with The Hacker News, the number of available logs containing compromised ChatGPT accounts peaked at 26,802 in May 2023. Over the past year, the Asia-Pacific region had the highest concentration of ChatGPT credentials offered for sale.

Besides India, the countries with the highest counts of compromised ChatGPT credentials are Pakistan, Brazil, Vietnam, Egypt, the United States, France, Morocco, Indonesia, and Bangladesh.

A closer analysis found that the majority of logs containing ChatGPT accounts were produced by the infamous Raccoon information stealer (78,348), followed by Vidar (12,984) and RedLine (6,773). Information stealers have become increasingly popular among cybercriminals because they can harvest passwords, cookies, credit card details, and other data from browsers and cryptocurrency wallet extensions.
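To put these counts in proportion, the short Python script below computes each stealer family's share of the 101,100 total. It assumes that the per-stealer counts and the total are measured over the same set of credentials, which the report excerpt does not state explicitly; the variable and function names are illustrative.

```python
from __future__ import annotations

# Logs containing ChatGPT credentials, by stealer family (figures from the Group-IB report).
STEALER_COUNTS = {
    "Raccoon": 78_348,
    "Vidar": 12_984,
    "RedLine": 6_773,
}

# Total compromised ChatGPT credentials, June 2022 to May 2023.
# Assumption: the per-stealer counts above are drawn from this same total.
TOTAL = 101_100


def shares(counts: dict[str, int], total: int) -> dict[str, float]:
    """Return each stealer family's share of `total` as a percentage."""
    return {name: 100 * n / total for name, n in counts.items()}


if __name__ == "__main__":
    for name, pct in shares(STEALER_COUNTS, TOTAL).items():
        print(f"{name:<8} {pct:5.1f}%")
    combined = sum(STEALER_COUNTS.values())
    print(f"Top three combined: {combined:,} ({100 * combined / TOTAL:.1f}%)")
```

Under that assumption, the script reports roughly 77.5% for Raccoon, 12.8% for Vidar, and 6.7% for RedLine, with the three families together accounting for 98,105 entries, or about 97.0% of the total.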

According to Group-IB, logs containing data harvested by information stealers are actively traded on dark web marketplaces. These markets also list additional details about each log, such as the domains found in it and the IP address of the compromised host. The logs are typically sold on a subscription basis, which not only lowers the barrier to entry for cybercrime but also serves as a conduit for follow-on attacks using the siphoned credentials. Dmitry Shestakov, head of threat intelligence at Group-IB, noted that many enterprises are integrating ChatGPT into their operational flow.

In these workflows, employees enter classified correspondence or use the bot to optimize proprietary code. Because ChatGPT's standard configuration retains all conversations, threat actors who obtain account credentials could inadvertently gain access to a wealth of sensitive intelligence.

To mitigate such risks, users are strongly advised to practice good password hygiene and to secure their accounts with two-factor authentication (2FA) to prevent account takeover attacks.
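Many 2FA deployments use time-based one-time passwords (TOTP, RFC 6238), in which the server and an authenticator app derive short-lived codes from a shared secret, so a password alone is not enough to log in. As a rough illustration of that mechanism, and not of how any particular service (including ChatGPT) implements 2FA, here is a minimal standard-library Python sketch; the function names are hypothetical.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import struct
import time


def key_from_base32(secret: str) -> bytes:
    """Decode a base32 shared secret, as typically shown during authenticator setup."""
    cleaned = secret.replace(" ", "").upper()
    cleaned += "=" * (-len(cleaned) % 8)  # restore padding that apps often omit
    return base64.b32decode(cleaned)


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(key: bytes, at: float | None = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify(key: bytes, submitted: str, at: float | None = None,
           step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept a code from the current step or up to `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(key, counter + d, digits), submitted)
        for d in range(-window, window + 1)
        if counter + d >= 0  # HOTP counters are unsigned
    )
```

The `window` parameter tolerates small clock drift between the authenticator and the server; real deployments usually also refuse a code that has already been accepted once.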

The development comes amid an ongoing malware campaign that exploits fake OnlyFans pages and adult content lures to distribute DCRat (or DarkCrystal RAT), a remote access trojan and information stealer that is a modified version of AsyncRAT.

How the stealer malware spreads

According to eSentire researchers, the campaign has been active since January 2023 and entices victims into downloading ZIP files containing a VBScript loader, which the victims then execute manually. The file names suggest that victims were lured with explicit photos or OnlyFans content featuring various adult film actresses.
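As a defender-side illustration of this delivery method, the following Python sketch inspects a ZIP archive without extracting it and flags members whose extensions indicate Windows script files, including double extensions such as `photo.jpg.vbs` that mimic image lures. The extension lists and the `scan_zip` and `classify_member` helpers are hypothetical heuristics, not indicators published by eSentire.

```python
from __future__ import annotations

import io
import zipfile
from pathlib import PurePosixPath
from typing import IO

# Illustrative assumption: extensions Windows can run as scripts or shortcuts.
SCRIPT_EXTENSIONS = {".vbs", ".vbe", ".js", ".jse", ".wsf", ".hta", ".ps1", ".bat", ".cmd", ".lnk"}
# Illustrative assumption: harmless-looking extensions a lure might place before the real one.
DECOY_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".mp4", ".pdf"}


def classify_member(name: str) -> str | None:
    """Return a reason string if an archive member looks like a script, else None."""
    suffixes = [s.lower() for s in PurePosixPath(name).suffixes]
    if not suffixes or suffixes[-1] not in SCRIPT_EXTENSIONS:
        return None
    if len(suffixes) >= 2 and suffixes[-2] in DECOY_EXTENSIONS:
        return "script disguised with a media or document extension"
    return "script file"


def scan_zip(source: str | bytes | IO[bytes]) -> dict[str, str]:
    """Read the ZIP's member names (nothing is extracted) and report suspicious ones."""
    if isinstance(source, bytes):
        source = io.BytesIO(source)  # allow scanning an in-memory download or attachment
    findings: dict[str, str] = {}
    with zipfile.ZipFile(source) as zf:
        for name in zf.namelist():
            reason = classify_member(name)
            if reason is not None:
                findings[name] = reason
    return findings
```

Because it only reads the archive's directory, the check never writes or runs the suspicious files; it is a triage heuristic, so a clean result does not prove an archive is safe.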

A new VBScript variant of the GuLoader malware (also known as CloudEyE) has also been discovered. It uses tax-themed decoys to launch PowerShell scripts that retrieve Remcos RAT and inject it into a legitimate Windows process.

According to a report published earlier this month by eSentire, the Canadian cybersecurity company, GuLoader is a highly evasive malware loader commonly used to deliver information stealers and Remote Administration Tools (RATs). It relies on user-initiated scripts or shortcut files to execute multiple rounds of highly obfuscated commands and encrypted shellcode, resulting in a memory-resident malware payload that runs inside a legitimate Windows process.
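The report excerpt does not detail GuLoader's individual obfuscation layers. As a generic, defender-side illustration of one common layer in PowerShell-based attack chains, the sketch below decodes PowerShell's `-EncodedCommand` argument, which takes a base64-encoded UTF-16LE string. It is not a reconstruction of GuLoader's actual scripts, and the helper names and regular expression are hypothetical.

```python
from __future__ import annotations

import base64
import re

# Matches "-EncodedCommand <blob>" or the common short form "-enc <blob>".
# Assumption: only these two spellings are handled; other abbreviations may also be accepted.
ENCODED_ARG = re.compile(r"-(?:encodedcommand|enc)\s+([A-Za-z0-9+/]+={0,2})", re.IGNORECASE)


def encode_command(script: str) -> str:
    """Encode a script the way -EncodedCommand expects: UTF-16LE bytes, then base64."""
    return base64.b64encode(script.encode("utf-16-le")).decode("ascii")


def decode_command(blob: str) -> str:
    """Reverse encode_command: base64-decode, then interpret the bytes as UTF-16LE."""
    return base64.b64decode(blob, validate=True).decode("utf-16-le")


def extract_encoded_commands(command_line: str) -> list[str]:
    """Decode every -EncodedCommand/-enc argument found in a process command line."""
    return [decode_command(m.group(1)) for m in ENCODED_ARG.finditer(command_line)]
```

Decoding a single layer like this often reveals further encoded or encrypted content; multi-stage loaders are designed so that each layer must be peeled in turn.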